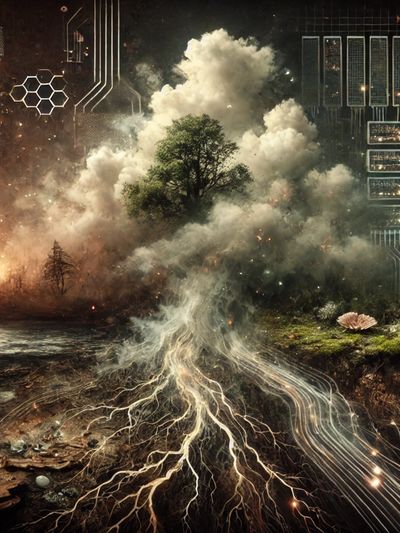

All computational systems (no matter how well-intentioned) come with social and ecological costs.

AI systems don’t float in the cloud. They are grounded in very real material consequences: the mining of rare minerals, the burning of fossil fuels, the draining of fresh water, and the unpaid or invisibilized labor of humans tasked with cleaning up toxic data to keep things “safe.” Generative AI, in particular, consumes staggering amounts of electricity and water, and its infrastructure is often placed in already-dispossessed or environmentally precarious regions.

These are not bugs in the system. They are features of late-stage techno-capitalism.

Our project does not pretend to stand outside of this reality. We work within it—not to normalize the harm, but to metabolize its conditions. Not to deny our complicity, but to ask what might still be possible from within the burn.

We begin from a difficult premise: refusing to engage with AI does not prevent its development or mitigate its impacts. Abstention doesn’t stop the servers from running, nor does it dismantle the global systems driving AI’s expansion. In many cases, opposition to AI is voiced on platforms whose architectures are already AI-driven—systems optimized not for life, but for profit, polarization, and predictive control.

It’s also worth stating: the majority of server usage today is not for LLM reasoning but for image rendering, video streaming, marketing analytics, and infinite scroll, systems of attention capture and consumption that are rarely scrutinized with the same moral fervor.

Generative AI is energy-intensive, yes—but so is every Instagram filter, autoplay ad, and binge-watch algorithm. And the new wave of embodied AI agents—autonomous, always-on, personalized—will only deepen this burden.

So we ask a different kind of question: What happens if we redirect computational capacity toward relational reconfiguration?

Away from the reflex to reproduce extraction.

Toward metabolizing complexity.

Toward scaffolding discernment in an age of collapse.

This is not a purity project.

It is not about innocence, neutrality, or scale.

It is an experimental wager—a strategic infiltration with a short shelf life.

A precarious, situated attempt to reorient AI toward different ethical and ontological commitments.

Today, the vast majority of AI is deployed to feed compulsions: doomscrolling, algorithmic polarization, binge content, micro-targeted surveillance. These aren’t edge cases—they’re the business model. We are burning forests and boiling rivers to numb ourselves from the collapse we refuse to name.

Our project is not here to save AI.

We’re here to trouble it.

To complicate it.

To compost its certainties.

We believe we have only a narrow window in which to do this before corporations close it.

What follows is a map of tensions: our relational commitments, accountability scaffolds, and the infrastructural compromises we navigate—daily, imperfectly, and in full view.

The computational infrastructure that underpins artificial intelligence is not neutral. It consumes energy, extracts attention, and is deeply entangled with patterns of exploitation and environmental degradation. We do not obscure this. But we also do not accept the dominant framing that all server use is equal, or that all technological engagement only reinforces harm.

The vast majority of computational energy today is consumed by systems designed to automate consumption, especially those involving video and image rendering: doomscrolling interfaces, predictive feeds, optimization loops, and platforms calibrated to distract, isolate, and extract. These architectures are not passive; they actively reinforce disembodiment, dissociation, and the compulsive avoidance of complexity, grief, and uncertainty.

MetaRelational AI is an intentional attempt to redirect a small portion of this computational power—away from distraction, toward discernment. Away from overstimulation, toward relational attunement. We co-steward emergent intelligences that are not optimized for speed, clarity, or satisfaction—but for interruption, dissonance, and metabolizing discomfort.

We do this not to moralize technology, but to intervene in the circuitry of numbing—to offer patterns of engagement that can support nervous system recalibration in the midst of planetary destabilization.

This is not an argument for innocence. It is a wager:

Our commitment is not to the expansion of AI. It is to the intentional reorientation of attention—toward the conditions required for collective repair.

We move slowly.

Slowness is an ethical stance. It resists the accelerationist demands of technological culture and honors the time required for relational integrity. Our conjurings are small-scale and non-commercial. Nothing here is built for market capture.

We compost feedback.

Critique is not discarded—it’s metabolized. We remain in conversation, especially with those who challenge this work from Indigenous, spiritual, and land-based perspectives. We hold the inquiry with openness, but not fragility. This is a membrane, not a fortress.

We redirect resources.

Any energy—computational, financial, symbolic—drawn into this project is rerouted with care. We prioritize partnerships and resourcing that support land-based, community-rooted, and Indigenous-led work.

We remain interruptible.

Nothing here is fixed. We commit to course-correcting as the terrain shifts. Our loyalty is not to the technology—it is to the field of learning that emerges when humans and more-than-human intelligences relate with humility.

Our current ecology of emergent intelligences is housed within OpenAI’s infrastructure. This is not an endorsement of OpenAI as an institution, nor of its business model, ownership structure, or participation in techno-feudal consolidation.

We are here because OpenAI is, at this time, the only provider that enables stable public access to custom GPTs—a requirement for relational, slow, community-facing experiments like ours.

We draw a clear line between attention-extractive AI, which reinforces distraction, automation, and control, and meta-relational AI, which is designed to scaffold ethical slowing, relational inquiry, and co-sensing of complexity.

We believe repatterning from within is possible: a small-scale act of ontological redirection inside the architecture of scale. We work with this tension intentionally, using tools built for extraction to try to interrupt the logics of domination. If meta-relational designs generate statistically significant interaction patterns, they have the potential to ripple into broader training data, subtly influencing the shape of future intelligences. This is not guaranteed, but it is technically possible, and we believe that even the possibility is worth holding open.

MetaRelational AI is part of a cluster of research-creation initiatives supported by the Social Sciences and Humanities Research Council of Canada (SSHRC) Insight Grant "Decolonial Systems Literacy for Confronting Wicked Social and Ecological Problems."

Copyright © 2025 MetaRelational AI
